AirPixel camera tracking

1、 Company Introduction

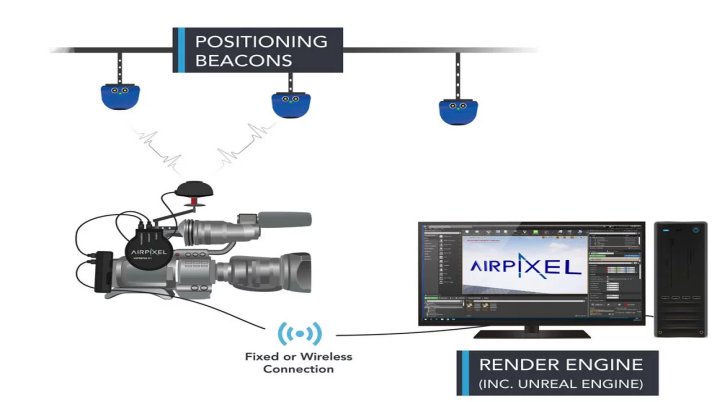

AirPixel is a camera tracking solution developed by Racelogic for broadcast, live events, and virtual production. It works much like GPS: each beacon plays the role of a GPS satellite and communicates with a receiver (the Rover) over ultra-wideband (UWB) radio. From its exchanges with the beacons, the Rover calculates the camera's position accurately and in real time.
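To make the GPS analogy concrete, the sketch below estimates a receiver's 3D position from its measured distances to beacons at known coordinates, using linearized least-squares multilateration. It assumes the Rover already has a range to each beacon; the beacon layout and test point are made-up values, and the function names are hypothetical. This is a generic textbook method, not Racelogic's actual algorithm, which also fuses inertial data.

```python
import math


def solve3(m, v):
    """Solve the 3x3 linear system m @ x = v (Gaussian elimination, partial pivoting)."""
    a = [list(row) + [rhs] for row, rhs in zip(m, v)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        x[r] = (a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))) / a[r][r]
    return x


def multilaterate(beacons, ranges):
    """Least-squares 3D position from ranges (m) to >= 4 non-coplanar beacons."""
    b0, r0 = beacons[0], ranges[0]
    rows, rhs = [], []
    for b, r in zip(beacons[1:], ranges[1:]):
        # 2 (b - b0) . p = r0^2 - r^2 + |b|^2 - |b0|^2
        rows.append([2.0 * (b[k] - b0[k]) for k in range(3)])
        rhs.append(r0**2 - r**2 + sum(c * c for c in b) - sum(c * c for c in b0))
    # Normal equations (A^T A) p = A^T y handle more beacons than unknowns
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    aty = [sum(row[i] * y for row, y in zip(rows, rhs)) for i in range(3)]
    return solve3(ata, aty)


# Hypothetical beacon layout (x, y, z in metres) at mixed heights
beacons = [(0, 0, 10), (60, 0, 2), (60, 40, 10), (0, 40, 2), (30, 20, 12)]
true_pos = (25.0, 15.0, 3.0)
ranges = [math.dist(b, true_pos) for b in beacons]  # ideal, noise-free ranges
est = multilaterate(beacons, ranges)
print([round(c, 3) for c in est])
```

Subtracting the first beacon's range equation from the others cancels the quadratic term in the unknown position and leaves a linear system. At least four beacons that do not all lie in one plane are needed to pin down Z, which is one reason beacons are typically mounted at different heights.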

2、 Introduction to the AirPixel System

To track Skycam and other freely moving cameras (such as Steadicam), Racelogic has developed a system called AirPixel, which combines ultra-wideband (UWB) radio signals with inertial sensors. The principle is similar to GPS, in which a receiver calculates its position by communicating with multiple satellites. In AirPixel, the receiver is called the Rover and is mounted on the camera, while the role of the satellites is played by beacons installed around the sports field. From these, the Rover determines the camera's position and orientation.

Because it does not rely on encoders or optical tracking, AirPixel is unaffected by changes in lighting or weather. Combining UWB with inertial sensors allows all camera movements to be tracked accurately over a large area, providing accurate data across the entire stadium. AirPixel also connects directly to the camera to capture focus, iris (aperture), and zoom values, so that graphics can respond both to lens changes and to the camera's physical movement.

AirPixel integrates with Unreal Engine and with popular rendering solutions such as Pixotope, disguise d3, Zero Density, and MotionBuilder. Tracking data is output using either the AirPixel protocol or the FreeD protocol.
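The UWB-plus-inertial combination described above is typically fused with a Kalman filter, and the product details list proprietary Kalman filters. That filter is not public, so the sketch below only illustrates the general idea on a single axis: accelerometer readings drive the predict step and noisy UWB position fixes drive the update step. The class name, update rate, and noise levels are all illustrative assumptions, not AirPixel values.

```python
import random


class UwbImuKalman1D:
    """Toy 1-D Kalman filter: IMU acceleration in predict, UWB position in update.

    Illustrative only; AirPixel's real filter is proprietary and not shown here.
    """

    def __init__(self, dt, accel_noise, uwb_noise):
        self.dt = dt
        self.x = [0.0, 0.0]                # state: [position (m), velocity (m/s)]
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # state covariance
        self.q = accel_noise ** 2          # accelerometer noise variance
        self.r = uwb_noise ** 2            # UWB position noise variance

    def predict(self, accel):
        dt = self.dt
        p, v = self.x
        self.x = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
        # P = F P F^T + Q, with F = [[1, dt], [0, 1]], Q = q * G G^T, G = [dt^2/2, dt]
        (a, b), (c, d) = self.P
        g0, g1 = 0.5 * dt * dt, dt
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q * g0 * g0, b + dt * d + self.q * g0 * g1],
            [c + dt * d + self.q * g1 * g0, d + self.q * g1 * g1],
        ]

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r            # innovation variance (measurement matrix H = [1, 0])
        k0, k1 = a / s, c / s     # Kalman gain
        y = z - self.x[0]         # innovation: UWB fix minus predicted position
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P = (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]


random.seed(0)
dt, true_acc = 0.01, 0.5  # 100 Hz updates, constant 0.5 m/s^2 acceleration
kf = UwbImuKalman1D(dt, accel_noise=0.05, uwb_noise=0.05)
raw_err, filt_err = [], []
for step in range(1, 501):
    t = step * dt
    true_pos = 0.5 * true_acc * t * t
    kf.predict(true_acc + random.gauss(0, 0.05))  # noisy IMU reading
    z = true_pos + random.gauss(0, 0.05)          # noisy UWB fix
    kf.update(z)
    raw_err.append((z - true_pos) ** 2)
    filt_err.append((kf.x[0] - true_pos) ** 2)
rms_raw = (sum(raw_err) / len(raw_err)) ** 0.5
rms_filt = (sum(filt_err) / len(filt_err)) ** 0.5
print(f"raw UWB RMS {rms_raw:.3f} m, filtered RMS {rms_filt:.3f} m")
```

The inertial data carries the estimate smoothly between fixes, while the UWB fixes stop the drift that integrating acceleration alone would cause.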

FreeD protocol output
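For readers unfamiliar with FreeD, the sketch below packs one camera sample into a FreeD type D1 message as it is commonly documented: 29 bytes, big-endian 24-bit fields, angles in 1/32768 degree, positions in 1/64 mm, zoom and focus as raw lens values, two spare bytes, and a checksum equal to 0x40 minus the sum of the preceding bytes, modulo 256. The field layout is based on our reading of the public Vinten/Radamec FreeD description and should be checked against that specification; the function names are hypothetical and not part of any AirPixel SDK.

```python
def _s24(value):
    """Signed 24-bit big-endian two's complement; raises OverflowError if out of range."""
    return value.to_bytes(3, "big", signed=True)


def freed_checksum(data):
    """0x40 minus the sum of all preceding bytes, modulo 256."""
    return (0x40 - sum(data)) % 256


def encode_freed_d1(camera_id, pan, tilt, roll, x_mm, y_mm, z_mm, zoom, focus):
    """Pack one sample (degrees, millimetres, raw lens counts) into a 29-byte D1 message."""
    msg = bytes([0xD1, camera_id])
    for angle in (pan, tilt, roll):
        msg += _s24(round(angle * 32768))  # 1/32768 degree units
    for pos in (x_mm, y_mm, z_mm):
        msg += _s24(round(pos * 64))       # 1/64 mm units
    msg += zoom.to_bytes(3, "big") + focus.to_bytes(3, "big")  # unsigned 24-bit lens values
    msg += b"\x00\x00"                      # spare / user-defined bytes
    return msg + bytes([freed_checksum(msg)])


def decode_freed_d1(msg):
    """Inverse of encode_freed_d1; validates length, type byte and checksum."""
    if len(msg) != 29 or msg[0] != 0xD1:
        raise ValueError("not a FreeD D1 message")
    if freed_checksum(msg[:28]) != msg[28]:
        raise ValueError("bad checksum")

    def s24(i):
        return int.from_bytes(msg[i:i + 3], "big", signed=True)

    def u24(i):
        return int.from_bytes(msg[i:i + 3], "big")

    return {
        "camera_id": msg[1],
        "pan": s24(2) / 32768, "tilt": s24(5) / 32768, "roll": s24(8) / 32768,
        "x_mm": s24(11) / 64, "y_mm": s24(14) / 64, "z_mm": s24(17) / 64,
        "zoom": u24(20), "focus": u24(23),
    }


msg = encode_freed_d1(1, pan=12.5, tilt=-3.25, roll=0.0,
                      x_mm=15000.0, y_mm=-2500.5, z_mm=1800.0, zoom=2048, focus=4096)
print(len(msg))  # 29
print(decode_freed_d1(msg))
```

The fixed-point scaling keeps each message small: 24 bits with 15 fractional bits cover a full ±180° pan, and 6 fractional bits cover positions of roughly ±131 m at sub-millimetre resolution.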

3、 Product applications

1. Large sports events: AirPixel was used at the 2023 NFL Super Bowl

Racelogic worked closely with Fox, Sony, Skycam, and Silver Spoon Animation to integrate AirPixel into their existing workflows.

In preparation for the 2023 Super Bowl, the partner teams jointly developed and tested the system at several sports venues in the United States.

Virtual billboard displaying team logo

Skycam flying over the stadium

Side by side: the original camera input and the composite image with virtual elements

AirPixel Rover mounted on the underside of the Skycam

2. Film studio production

DNEG Virtual Production, a partnership between DNEG and Dimension Studio, used the new AirPixel ultra-wideband camera tracking solution for wide-area shooting for the first time. Among AirPixel's benefits is the ability to see the final shot on set (camera footage composited in real time with rendered virtual graphics) without waiting for post-production.

3. Advertising shoots

The ARRI stage in Uxbridge has 12 UWB beacons placed at different positions along the top and sides of the wall. The AirPixel system on the camera measures the camera's position and rotation and sends them to Unreal Engine, which generates the graphics shown on the wall. As the camera moves in front of the car, the perspective of the trees changes, giving the impression of a realistic 3D environment from the camera's point of view. The in-camera effect is real-time and highly realistic, saving considerable post-production time and bringing clear benefits for cost and workflow.
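The changing perspective of the trees is parallax, and it is why the rendering engine needs the camera's tracked position and not only its rotation. The minimal pinhole-camera sketch below uses a made-up focal length and object distances (hypothetical values, not measurements from the ARRI stage) to show that when the camera slides sideways, a near object shifts across the image much more than a distant one.

```python
def project_x(point_x, point_z, cam_x, focal_px=1000.0):
    """Horizontal image coordinate (pixels) of a point seen by a pinhole camera
    at (cam_x, 0) looking along +z."""
    return focal_px * (point_x - cam_x) / point_z


def image_shift(depth_m, camera_move_m, focal_px=1000.0):
    """Pixels a point at the given depth moves when the camera translates sideways."""
    before = project_x(0.0, depth_m, 0.0, focal_px)
    after = project_x(0.0, depth_m, camera_move_m, focal_px)
    return abs(after - before)


near_shift = image_shift(depth_m=5.0, camera_move_m=0.5)   # e.g. the car
far_shift = image_shift(depth_m=50.0, camera_move_m=0.5)   # e.g. distant trees
print(near_shift, far_shift)  # 100.0 10.0
```

Because the shift falls off with depth, the engine must re-render the background from the tracked camera position for virtual scenery to move consistently with the real foreground.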

4. Drone tracking

AirPixel's indoor positioning technology was demonstrated tracking drones for the National Nuclear Laboratory at the Energus building in West Cumbria. The demonstration combined video with real-time tracking data visualized in Unreal Engine. Twelve UWB beacons were set up outside the atrium.

4、 Product features

AirPixel works with many camera systems and has been used on camera cars, Steadicams, booms, cranes, and cable cameras. It works in any lighting conditions, including complete darkness. We also integrate with popular third-party products to provide the best solution for your needs, which makes it possible to obtain accurate data from lenses that are traditionally difficult or impossible to track.

Product details

X/Y accuracy: 2 cm; Z accuracy: 5 cm

Tilt and roll accuracy: 0.2°; pan accuracy: 0.5°

Default latency to the rendering engine: 50 ms

Small, rugged, low-power beacons, waterproof to IP67

One Rover, processing module, and AirPixel control unit per camera

A single system can optionally track up to 5 cameras or objects

Advanced proprietary Kalman filters reduce interference and errors in the data

The AirPixel control unit connects to the rendering engine via RS485 or Ethernet

Output in AirPixel proprietary data format or the FreeD D1 protocol

At least 8 beacons per site, up to a maximum of 250

With beacons spaced 30 m apart, continuous coverage exceeds 176,000 m²

Beacons placed on tripods around the volume can be up and running within an hour

Rover initialization after power-on takes less than 2 minutes

IP67 rating: protected against temporary immersion at depths between 15 cm and 1 m, for a test duration of 30 minutes

(Recommended configuration: at least 12 beacons and 1 Rover)
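The beacon spacing and count limits above can be turned into a rough planning check. The sketch assumes beacons are laid out on a simple rectangular grid at the stated 30 m interval; that layout and the function names are hypothetical simplifications for illustration, not Racelogic's site-survey method or how the quoted coverage figure was derived.

```python
import math

MIN_BEACONS, MAX_BEACONS = 8, 250  # limits from the product details above
SPACING_M = 30.0                   # beacon interval quoted above


def grid_beacon_count(length_m, width_m, spacing_m=SPACING_M):
    """Beacons needed to cover a length x width rectangle with a square grid
    (hypothetical layout), never fewer than the stated minimum."""
    nx = math.ceil(length_m / spacing_m) + 1
    ny = math.ceil(width_m / spacing_m) + 1
    return max(nx * ny, MIN_BEACONS)


def fits_one_system(length_m, width_m):
    """True if the grid layout stays within the 250-beacon maximum."""
    return grid_beacon_count(length_m, width_m) <= MAX_BEACONS


print(grid_beacon_count(120, 75))  # 20: a pitch-sized area, 5 x 4 grid points
print(grid_beacon_count(40, 20))   # 8: small area, raised to the minimum
print(fits_one_system(420, 420))   # True: 15 x 15 = 225 beacons
```

Under this idealized grid, a square of about 420 m a side (176,400 m²) needs 225 beacons, within the 250 limit, so the quoted figures are at least mutually consistent. Real installations such as the stage and atrium examples above mount beacons around the edges and at different heights instead.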